The Mismeasure of Models

December 24, 2022

A worried friend recently sent me an article by Vice suggesting that humanity is nearing its demise. The focus of the piece is an update to a 1972 study by MIT scientists, Limits to Growth (LtG), which warned of the collapse of industrial civilisation by 2040. “The most probable result will be a rather sudden and uncontrollable decline in both population and industrial capacity,” warn the authors. The LtG study created a computational model called World3 which predicts our civilisational trajectory based on current trends such as economic growth and environmental limitations. The update revalidates the findings and charmingly warns us that “it’s almost, but not yet, too late for society to change course.”

While LtG received more public attention than most (it is the rare study with its own Wikipedia page), it’s not alone in its dire predictions for the future of humankind. In 1989, Richard Duncan advanced Olduvai theory, which states that industrial civilisation will last until approximately 2030, after which “world population will decline to about two billion circa 2050”. The discipline of cliodynamics is “turning history into an analytical, predictive science” and employs dynamical systems to model human violence. It predicts inescapable cycles of violence approximately every 50 years. Models based on predator-prey dynamics which warn that societal “collapse is difficult to avoid” continue to be published.

Like LtG, these predictions are based on some sort of mathematical model. Such models rely on a set of variables which interact with each other in predictable (albeit perhaps complicated) ways. Using known or projected values for the variables today lets us make predictions about their values tomorrow, and so on.

The infamous “Malthusian trap,” for instance, is based on two variables: food production and population size. Its namesake, Thomas Malthus, assumed that food production grew linearly, while population size grew exponentially. That is, the population grew faster than our ability to produce food. With these assumptions, it is inevitable that famine would occur, since food production could not possibly keep pace with population growth.
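The arithmetic behind the trap can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the starting values, and the growth rates are all hypothetical, chosen for readability rather than taken from Malthus or from any dataset.

```python
def first_shortfall_year(food0, food_step, pop0, pop_rate, max_years=1000):
    """Return the first year in which population exceeds food supply.

    Food grows linearly (a fixed amount added each year) while population
    grows exponentially (a fixed percentage added each year). Both are
    measured in the same hypothetical unit: "people who can be fed".
    Returns None if no shortfall occurs within max_years.
    """
    for year in range(max_years + 1):
        food = food0 + food_step * year              # linear growth
        population = pop0 * (1 + pop_rate) ** year   # exponential growth
        if population > food:
            return year
    return None


if __name__ == "__main__":
    # Illustrative numbers only: food for 200 people growing by 10 per year,
    # and a population of 100 growing at 3% per year.
    print(first_shortfall_year(food0=200, food_step=10, pop0=100, pop_rate=0.03))
```

Making `food_step` larger delays the crossover but does not remove it, because any positive percentage growth eventually outruns any fixed yearly increment. The conclusion is baked into the two assumed growth laws, not discovered by the simulation.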

Of course, Malthusianism was dealt a near-fatal blow by the Green Revolution, which drastically increased our ability to grow food. The technology responsible for the Green Revolution (high-yield crop varieties, efficient fertilizers, mechanized irrigation, and so on) could not have been foreseen by Malthus, and was thus not included in his model. The omission has nothing to do with Malthus himself; it is a problem with all models. They cannot rely on technology which does not yet exist. More generally, they cannot rely on relationships between variables that their creators did not imagine.

Models exist in what Jimmie Savage called “small worlds.” These are worlds where all inputs and outputs are known, as well as the rules governing all interactions. There are no unknown unknowns. The closer a phenomenon is to a small world, the better luck we’ll have with mathematical modeling. This is the case in physics and chemistry, for instance, where we’re typically interested in the behavior of small, isolated systems. As you broaden the phenomenon of interest, models become less useful. And when you attempt to predict the entire future, models hit peak futility.

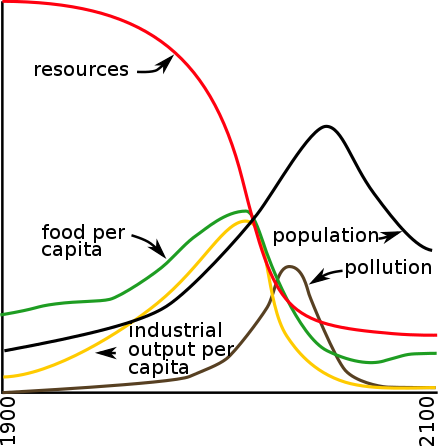

The World3 model of the LtG study is based on five variables: resources, food per capita, industrial output, population, and pollution. The following figure demonstrates an example run.

World Model Standard Run as shown in The Limits to Growth. Credit: YaguraStation

There are undoubtedly lessons to be drawn from LtG and the World3 model. But to rely on the interaction of five variables to tell us that the world is ending betrays a misunderstanding of the capabilities of models. Moreover, many of the decisions made by the LtG authors have been severely criticized. For instance, the climate scientist and policy analyst Vaclav Smil found several of the assumptions rather dubious:

Those of us who knew the DYNAMO language in which the simulation was written and those who took the model apart line-by-line quickly realized that we had to deal with an exercise in misinformation and obfuscation rather than with a model delivering valuable insights. I was particularly astonished by the variables labelled Nonrenewable Resources and Pollution. Lumping together (to cite just a few scores of possible examples) highly substitutable but relatively limited resources of liquid oil with unsubstitutable but immense deposits of sedimentary phosphate rocks, or short-lived atmospheric gases with long-lived radioactive wastes, struck me as extraordinarily meaningless.

The Nobel laureate William Nordhaus put it more succinctly: “It is hardly surprising that dead rabbits are pulled out of the hat when nothing but dead bunnies have been put in.” In fact, an entire book, Models of Doom: A Critique of the Limits to Growth, was published in response to LtG.

None of this is to say that modeling future scenarios is useless. Far from it. Such analyses can help us prioritize problems and flag worrying trends. Even Malthus’ basic model was useful: it highlighted that food production should be a core concern. More recently, the IPCC’s scenarios have been enormously helpful in navigating our policy and technological responses to climate change. But there is a reason the IPCC releases updates, and provides a range of different predictions. They must adapt to a changing world, and different assumptions lead to different outcomes. The IPCC reports are thousands of pages long because they are careful to model a huge variety of different scenarios, and it is never claimed that any of the scenarios represent what will certainly happen.

Models should thus be seen as guideposts to help us navigate new problems, not as infallible prophets endowed with the ability to foresee the end of civilization. Often, the role of models is well understood, and the inherent uncertainty is accounted for. But there can be severe misunderstandings when the results are translated out of scientific reports into normal language for public consumption. In particular, the assumptions are often left unstated.

Sometimes this mistranslation is the fault of those developing the model. They might believe that the underlying message is so important that nuance is best left at the door. Sometimes it’s driven by activists who have an interest in persuading the public that their cause is the only thing worth worrying about.

Regardless of who’s at fault, the result is a plethora of apocalyptic predictions which signal a fundamental confusion about how these models are developed. In 1968, a Malthusian-like model led Paul and Anne Ehrlich — professors at Stanford University — to predict that “[i]n the 1970s hundreds of millions of people will starve to death in spite of any crash programs embarked upon now”. Denis Hayes, the chief organizer of Earth Day, declared in 1970 that “it is already too late to avoid mass starvation.” In the same year, the professor Peter Gunter wrote that “By the year 2000, thirty years from now, the entire world, with the exception of Western Europe, North America, and Australia, will be in famine” (emphasis in original).

Predictions based on models should all be conditional statements. That is, they should be of the form: If the assumptions of the model are met, then this scenario will occur. Most apocalyptic predictions, by their very nature, are unconditional. They simply state: This scenario will occur. But models are inherently conditional: they are only as good as their assumptions. Dropping the condition gives the public the impression of a much more certain prediction than is warranted, often with a much bleaker outcome.

Over-claiming the power of models to foresee the future has detrimental effects on public trust. How many times can you claim the world is ending before people stop listening? Moreover, the constant pessimism erodes our belief that progress can be made. Pessimism is a barrier to progress. While models are useful for gaining perspectives on our problems, they are only a map of the territory, not the territory itself. As Smil notes, “those who put models ahead of reality are bound to make the same false calls again and again.”
