The era of thinking about thinking

November 30, 2024

Here are four trends that I’m excited about (some older than others, but all ongoing):

- Improving our ability to remember and digest the information that we read, with tools like Anki flashcards based on the idea of spaced repetition.

- Improving our note-taking technology, with tools like zettelkasten, digital gardens, and second brains.

- Improving our ability to efficiently search information space. Here the tools range from search engines (older) to large language models (newer).

- Improving programming productivity and collaboration. Tools range from code production environments like VS Code and Cursor, to code-sharing tools like GitHub, to code-generating tools like Copilot and LLMs.

What connects all of these areas?

They are all focused on making us more efficient at consuming and producing information. They are tools for thought: technology for helping us connect our thinking, spot patterns in our ideas, produce and spread knowledge more quickly, and surf the world of information more easily. They might all be considered advances in metascience: the study of how to improve the technology and institutions in charge of creating and disseminating knowledge.¹

I suspect these trends will only become more prominent over time. They are solving a problem that earlier societies did not have, or at least did not have to the same extent we do. The more dynamic and open a society, the more ideas it produces—from science and mathematics to art and literature. And the more ideas produced, the more technology is needed to remember, find, spread, manipulate, and digest those ideas.

While earlier societies made progress, the rate of progress was slower and the depth of knowledge shallower. If you go back far enough, it was possible to know most of what had been written about even fairly broad subjects, and perhaps even to personally know those who had written it. There were only a handful of prominent philosophers in ancient Athens (Socrates, Plato, Aristotle, Epicurus, and a few others), economics in the 1700s and 1800s was dominated by a few figures (Smith, Ricardo, Galiani, and arguably Bentham, Mill, and Marx), and even 20th-century physicists were a relatively small community.

But now there are tens or hundreds of thousands of people interested in most fields, from credentialed researchers to bloggers. And it’s easy for all of them to upload their newest idea to the internet. We’re swimming in ideas. The number of formal scientific papers has exploded (e.g., the arXiv alone went from receiving roughly 50 monthly submissions in 1991 to over 24,000 in October 2024), let alone the number of informal books, blogs, and podcasts.

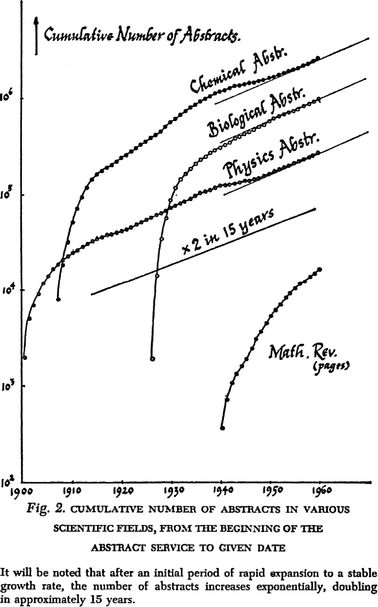

A figure from Derek J. de Solla Price’s 1963 work on tracking the number of scientific publications over time, Little Science, Big Science. Price was one of the first people interested in tracking these trends.

You can no longer read everything published about a topic, unless you drastically narrow the subject matter down to a sub-sub-sub-field. One can barely keep up with the publications of a handful of journals in microeconomics, let alone economics as a whole.

This puts pressure on discovering new ways to organize this information, both personally and institutionally. This pressure is what originally led to the invention of journals, which were circulated in order to keep scholars informed about developments in various fields. Some of the earliest journals were The Philosophical Transactions of the Royal Society, The Proceedings of the Royal Society, and Annalen der Physik (all still active).

This pressure also led to correspondence networks between academics so that they could tell each other about new work. Famously, Charles Darwin sent more than 7,500 letters and received more than 6,500; Einstein sent more than 14,500 and received more than 16,000. It also led to organized meetings and conferences between those working in the same area. The Solvay conference of 1911, often considered the first international physics conference, brought together the few dozen people who were working on radiation and quanta.

The first Solvay conference, held in 1911 at the Hotel Metropole in Brussels. Participants included Solvay, Lorentz, Curie, Poincaré, Planck, de Broglie, Rutherford, Langevin, and Einstein.

While we still have journals and conferences, more is needed to keep pace with all the ideas floating around. And that’s where the four trends come into play. We’re learning how to remember more about what we read, organize our knowledge more efficiently, and find information more easily. (The fourth trend may at first seem unrelated, but programming has become such a key part of so many fields that advances here translate to advances everywhere.)

Part of my excitement is purely personal. Nerds like me who want to Know Things have never had it better. We can sit in front of our computers and learn about everything from the French Revolution and tort law to general relativity and art history.

But more importantly (and more loftily), I think these trends hold part of the key to fighting the great stagnation, a period of slow economic growth starting in the early 1970s. A lot of bad things happened in the 1970s, but part of the great stagnation was a slowing of innovation and scientific progress. I’m not going to get into the history of and evidence behind this claim here. Instead, you can read Scott Alexander talk about it and show you fancy graphs to make the point.

There are several hypotheses for why this slowdown happened. Some cite institutional and cultural reasons, from pessimism and an anti-progress ideology to the distorted incentives created by funding agencies that are only willing to fund safe research. Others cite the low-hanging fruit hypothesis, the idea that the biggest and most fundamental discoveries tend to happen earlier, and as time goes on good ideas get harder to find. You can only discover that DNA has the shape of a double helix once; the next insight is going to be fundamentally harder to reach.

I lean closer to the culture argument. (I side with Adam Mastroianni.) The low-hanging fruit hypothesis strikes me as a priori implausible because it misrepresents the discovery process. It treats knowledge generation as static, assuming that the set of ideas is fixed and that we’re simply trying to grab the next one within reach. But the next idea—whether in science, art, or literature—is a function of what questions we’re asking. And the questions we ask change constantly, not least because of what we learn. New knowledge suggests new questions and adds new fruit to the tree.

But there’s a catch: new questions may not be entirely within the original field of inquiry. This is because everything is related: we’ve erected artificial boundaries between scientific fields purely as a matter of administrative convenience. But if we take E. O. Wilson’s idea of consilience seriously, then answering a question in physics can (and will!) have ramifications in chemistry, biology, mathematics, and even the humanities and social sciences.

The slowing of scientific progress is arguably a byproduct of hyper-specialization. Physicists do only physics, biologists only biology. And, of course, ideas get harder to find if you’re always asking the same questions. But if you’re open to new questions at the frontier between fields, then there will always be new low-hanging fruit to pick. To recognize these new questions, however, one has to be aware of the connections between domains of inquiry. And that’s what these four trends are doing: helping us recognize new relationships by helping us productively interact with and digest a wealth of information.

1. Metascience typically refers to studying formal scientific institutions like peer review, grant-making agencies, and universities. But the spirit of metascience is to accelerate knowledge production, and there’s no reason the term should not be applied to innovations that help individuals create knowledge.
